Introduction

The rise of generative AI has opened new possibilities for content creators, developers, and businesses to customize machine learning models for niche applications. By fine-tuning a model on your own prompts and example responses, you can shape its outputs to reflect specific styles, tones, domains, or user needs.

In this guide, we break down how to train or fine-tune your own AI model step by step, along with prompt engineering strategies and SEO best practices to help your content get discovered.

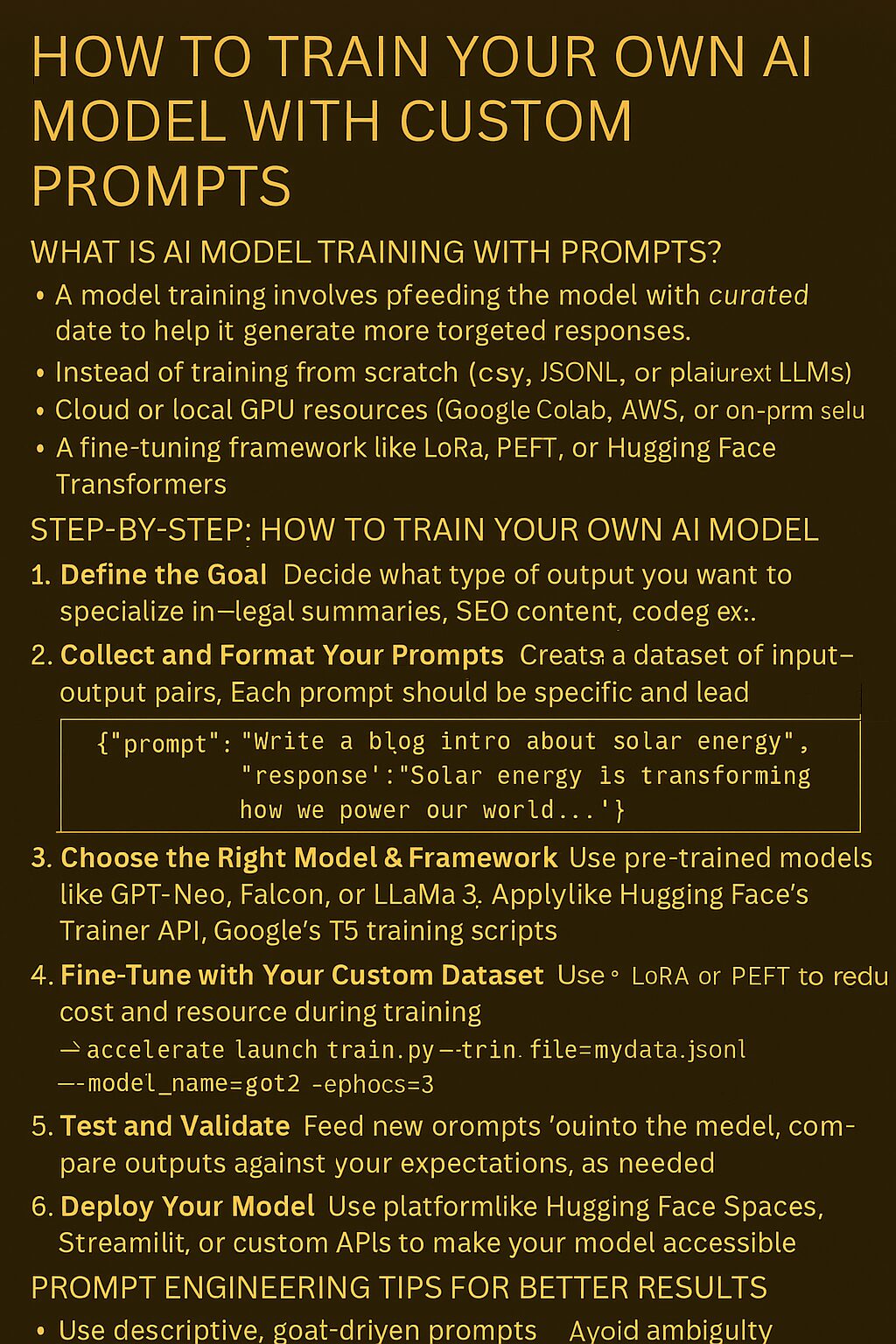

🧠 What Is AI Model Training with Prompts?

AI model training means updating a model’s weights on curated data so it generates more targeted responses. Instead of training from scratch, which requires large amounts of data and compute, most users fine-tune an existing base model. A popular approach is instruction tuning: supervised fine-tuning on custom input/output pairs that show the model the behavior you want.

🛠️ Requirements Before You Begin

To train your AI model with custom prompts, you’ll typically need:

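- A base model (for example, an open-weight LLM such as GPT-2 or Llama; hosted models such as OpenAI’s GPT series are fine-tuned through the provider’s own API instead)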

- A prompt-response dataset (CSV, JSONL, or plain text format)

- Cloud or local GPU resources (Google Colab, AWS, or on-prem setup)

- A fine-tuning library such as Hugging Face Transformers, optionally with PEFT for parameter-efficient methods like LoRA
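How much GPU you need depends mostly on model size and on whether you update every weight. The sketch below applies a common back-of-the-envelope rule (an assumption, not a measured figure): about 2 bytes per parameter to hold fp16 weights, and about 16 bytes per parameter for full fine-tuning with mixed-precision Adam. It ignores activations, batch size, and framework overhead, so real usage is higher. The function name and labels are hypothetical.

```python
def estimate_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory for model state alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Rule-of-thumb bytes per parameter (assumption; excludes activations,
# batch size, sequence length, and framework overhead).
BYTES_PER_PARAM = {
    # fp16/bf16 weights only: inference, or a frozen base model under LoRA
    "fp16 weights only": 2,
    # fp16 weights (2) + fp16 gradients (2) + fp32 master weights and
    # two fp32 Adam moment buffers (4 + 4 + 4)
    "full fine-tune, mixed-precision Adam": 16,
}

for label, n_params in [("GPT-2 small (~124M params)", 124e6), ("7B-param model", 7e9)]:
    for setup, nbytes in BYTES_PER_PARAM.items():
        print(f"{label}, {setup}: ~{estimate_memory_gb(n_params, nbytes):.1f} GB")
```

By this estimate a 7B model needs about 14 GB just for fp16 weights and roughly 112 GB of state for full fine-tuning, which is why parameter-efficient methods such as LoRA are popular on single-GPU setups.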

🔄 Step-by-Step: How to Train Your Own AI Model

1. Define the Goal

Decide what kind of output the model should specialize in: legal summaries, SEO content, code generation, poetry, and so on.

2. Collect and Format Your Prompts

Create a dataset of input-output pairs. Each prompt should be specific and lead to a consistent, relevant response.

Example record (a JSONL file holds one such object per line):

```json
{"prompt": "Write a blog intro about solar energy", "response": "Solar energy is transforming how we power our world..."}
```
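A malformed line can waste a whole training run, so it helps to write and check the dataset programmatically. This is a minimal sketch using only the standard library; `write_jsonl`, `load_jsonl`, and the validation rules (each record must be an object with non-empty `prompt` and `response` strings) are assumptions matching the example record above, not requirements of any particular trainer.

```python
import json

REQUIRED_FIELDS = ("prompt", "response")


def write_jsonl(records, path):
    """Write records as JSON Lines: one JSON object per line, UTF-8 encoded."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record, ensure_ascii=False) + "\n")


def load_jsonl(path):
    """Read a JSONL dataset, rejecting records without non-empty prompt/response strings."""
    records = []
    with open(path, encoding="utf-8") as f:
        for line_no, line in enumerate(f, start=1):
            if not line.strip():
                continue  # skip blank lines
            record = json.loads(line)
            if not isinstance(record, dict):
                raise ValueError(f"line {line_no}: expected a JSON object")
            for key in REQUIRED_FIELDS:
                value = record.get(key)
                if not isinstance(value, str) or not value.strip():
                    raise ValueError(f"line {line_no}: missing or empty {key!r}")
            records.append(record)
    return records
```

For example, `write_jsonl(pairs, "mydata.jsonl")` followed by `load_jsonl("mydata.jsonl")` surfaces bad rows, with their line numbers, before any GPU time is spent.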

3. Choose the Right Model & Framework

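Start from a pre-trained model such as GPT-Neo, Falcon, or Llama 3, picking a size your hardware can handle. Hugging Face’s Trainer API handles the training loop for most of these models; Google’s T5 training scripts are an option if you are working with T5.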
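A minimal sketch of how a Trainer-based run might fit together, assuming `transformers` and `datasets` are installed, a decoder-only (causal) model such as GPT-2 or GPT-Neo, and the `prompt`/`response` fields from the example record. `PROMPT_TEMPLATE`, `format_example`, `train`, and the hyperparameters are hypothetical, illustrative choices rather than recommended settings.

```python
# Sketch only: assumes `pip install transformers datasets` and a causal LM.
PROMPT_TEMPLATE = "### Prompt:\n{prompt}\n\n### Response:\n{response}"  # hypothetical format


def format_example(record: dict) -> str:
    """Join one prompt/response pair into a single training string."""
    return PROMPT_TEMPLATE.format(prompt=record["prompt"].strip(),
                                  response=record["response"].strip())


def train(train_file: str, model_name: str = "gpt2", epochs: int = 3,
          output_dir: str = "finetuned-model") -> None:
    # Heavy imports live here so format_example is usable without them.
    from datasets import load_dataset
    from transformers import (AutoModelForCausalLM, AutoTokenizer,
                              DataCollatorForLanguageModeling, Trainer,
                              TrainingArguments)

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    if tokenizer.pad_token is None:
        tokenizer.pad_token = tokenizer.eos_token  # GPT-2 ships without a pad token
    model = AutoModelForCausalLM.from_pretrained(model_name)

    dataset = load_dataset("json", data_files=train_file, split="train")
    dataset = dataset.map(
        lambda ex: tokenizer(format_example(ex), truncation=True, max_length=512),
        remove_columns=dataset.column_names,  # keep only the tokenized fields
    )

    trainer = Trainer(
        model=model,
        args=TrainingArguments(output_dir=output_dir, num_train_epochs=epochs,
                               per_device_train_batch_size=4),
        train_dataset=dataset,
        # mlm=False builds causal-LM labels from input_ids, masking padding
        data_collator=DataCollatorForLanguageModeling(tokenizer, mlm=False),
    )
    trainer.train()
    trainer.save_model(output_dir)
    tokenizer.save_pretrained(output_dir)
```

Calling `train("mydata.jsonl")` would fine-tune GPT-2 for three epochs. This version computes loss on the prompt tokens as well as the response; masking the prompt part of the labels is a common refinement.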

4. Fine-Tune with Your Custom Dataset

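Use parameter-efficient fine-tuning (PEFT) methods such as LoRA, which freeze the base model and train only a small set of added weights, to reduce cost and memory during training. Runs are often launched with Hugging Face Accelerate; in the command below, train.py is your own training script, so the flags are whatever that script defines: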

```bash
accelerate launch train.py --train_file=mydata.jsonl --model_name=gpt2 --epochs=3
```
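To see why LoRA is so much cheaper, here is the idea from scratch in plain Python. This is an illustration, not how the PEFT library is implemented internally. A frozen weight matrix W (d_out × d_in) is left untouched; only A (r × d_in) and B (d_out × r) are trained, and the layer computes W·x + (alpha/r)·B·A·x. B starts at zero, so the adapted layer initially behaves exactly like the base model. The names `LoRALinear` and `lora_param_count` are hypothetical; on real models, PEFT’s `LoraConfig` and `get_peft_model` set this up for you. The sizes below assume GPT-2 small’s combined query/key/value projection (768 → 2304 features, 12 layers).

```python
import random


def matvec(m, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


class LoRALinear:
    """Frozen weight W plus a trainable low-rank update (alpha / r) * B @ A."""

    def __init__(self, weight, r=2, alpha=4, seed=0):
        d_out, d_in = len(weight), len(weight[0])
        rng = random.Random(seed)
        self.weight = weight  # frozen: never updated during fine-tuning
        self.a = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]  # r x d_in, random
        self.b = [[0.0] * r for _ in range(d_out)]  # d_out x r, zeros
        self.scale = alpha / r

    def forward(self, x):
        base = matvec(self.weight, x)
        update = matvec(self.b, matvec(self.a, x))
        return [y + self.scale * u for y, u in zip(base, update)]


def lora_param_count(d_out, d_in, r):
    """Trainable parameters LoRA adds for one d_out x d_in weight matrix."""
    return r * (d_in + d_out)


full = 768 * 2304 * 12                         # weights in the adapted matrices
lora = lora_param_count(2304, 768, r=8) * 12   # trainable LoRA weights
print(f"full: {full:,}  LoRA (r=8): {lora:,}  ({lora / full:.2%})")
# full: 21,233,664  LoRA (r=8): 294,912  (1.39%)
```

During training, only `a` and `b` would receive gradient updates, which is where the memory and compute savings come from.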

5. Test and Validate

Feed new prompts (ones that were not in your training data) into the model and compare its outputs against your expectations. Adjust the data and training parameters as needed.
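One lightweight way to make this comparison repeatable is a small regression check: hold out some prompts, list phrases each answer should or should not contain, and score the model after every training run. The sketch assumes a hypothetical `generate(prompt) -> str` function wrapping your fine-tuned model; `EvalCase` and `run_checks` are illustrative names, and substring checks are a crude proxy, not a substitute for reading the outputs.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class EvalCase:
    prompt: str
    must_include: List[str] = field(default_factory=list)  # phrases the answer should contain
    must_exclude: List[str] = field(default_factory=list)  # phrases it should avoid


def run_checks(cases: List[EvalCase], generate: Callable[[str], str]) -> Tuple[float, list]:
    """Score a model on held-out cases with case-insensitive substring checks."""
    if not cases:
        raise ValueError("need at least one eval case")
    failures = []
    for case in cases:
        output = generate(case.prompt).lower()
        missing = [p for p in case.must_include if p.lower() not in output]
        forbidden = [p for p in case.must_exclude if p.lower() in output]
        if missing or forbidden:
            failures.append((case.prompt, missing, forbidden))
    return 1 - len(failures) / len(cases), failures
```

Tracking the pass rate across runs makes it easier to tell whether a data or parameter change actually helped.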

6. Deploy Your Model

Use platforms like Hugging Face Spaces, Streamlit, or custom APIs to make your model accessible to users or applications.
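For the custom-API route, a bare-bones JSON endpoint needs only Python’s standard library. This is a sketch, not production code: `generate` is a hypothetical placeholder for your model call, the `/generate` route and `{"prompt": ...}` request shape are assumptions, and the server is single-threaded with no authentication or rate limiting.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def generate(prompt: str) -> str:
    """Placeholder: replace with a call into your fine-tuned model."""
    return f"(model output for: {prompt})"


def handle_generate(body: bytes):
    """Turn a JSON request body into (status_code, JSON response bytes)."""
    try:
        payload = json.loads(body or b"{}")
        prompt = payload["prompt"]
        if not isinstance(prompt, str):
            raise TypeError("prompt must be a string")
    except (ValueError, KeyError, TypeError):
        return 400, json.dumps({"error": "expected JSON with a string 'prompt'"}).encode()
    return 200, json.dumps({"response": generate(prompt)}).encode()


class Handler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/generate":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, data = handle_generate(self.rfile.read(length))
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(port: int = 8000):
    """Block and serve requests on localhost until interrupted."""
    HTTPServer(("127.0.0.1", port), Handler).serve_forever()
```

After calling `serve()`, a request such as `curl -X POST http://127.0.0.1:8000/generate -d '{"prompt": "Hello"}'` returns the model’s response as JSON. Keeping the request logic in `handle_generate` makes it easy to test without starting a server.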

✍️ Prompt Engineering Tips for Better Results

- Use descriptive, goal-driven prompts

- Avoid ambiguity and jargon

- Add examples and constraints to guide behavior

- Test variations and iterate often

💡 Pro Tip: Document successful prompt styles so you can reuse them across use cases or domains.
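The tips above, together with the advice to document prompt styles that work, translate naturally into a small reusable template. A sketch with hypothetical names (`PromptTemplate`, `TEMPLATES`); the goal/constraints/examples/task layout it renders is one reasonable choice, not a standard format.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class PromptTemplate:
    """A reusable, documented prompt style."""
    name: str
    goal: str
    constraints: List[str] = field(default_factory=list)
    examples: List[Tuple[str, str]] = field(default_factory=list)  # (input, output) pairs

    def render(self, task: str) -> str:
        parts = [f"Goal: {self.goal}"]
        if self.constraints:
            parts.append("Constraints:\n" + "\n".join(f"- {c}" for c in self.constraints))
        for example_input, example_output in self.examples:
            parts.append(f"Example input: {example_input}\nExample output: {example_output}")
        parts.append(f"Task: {task}")
        return "\n\n".join(parts)


# A small library of prompt styles that worked, kept for reuse across projects.
TEMPLATES = {
    "blog_intro": PromptTemplate(
        name="blog_intro",
        goal="Write an engaging two-sentence blog introduction.",
        constraints=["Plain language, no jargon", "Under 50 words"],
        examples=[("solar energy", "Solar energy is transforming how we power our world...")],
    ),
}
```

Calling `TEMPLATES["blog_intro"].render("wind power")` produces a prompt with the goal, constraints, and example spelled out, so each variation you test starts from the same documented baseline.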

📈 SEO Optimization When Publishing AI-Enhanced Content

To help your AI-enhanced content rank well:

✅ Title your pages clearly: “AI Model Trained for Legal Writing Prompts”

✅ Write descriptive metadata and alt text that include your keywords naturally: “Custom-trained GPT model for SEO-friendly blog generation”

✅ Focus on long-tail keywords: “Train your own AI chatbot using JSONL prompts and LoRA fine-tuning”

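✅ Add structured FAQs and schema markup (for example, schema.org FAQPage data in JSON-LD) so search engines can better understand your pages; Google decides whether to display them as rich results on its SERPs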
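FAQ schema markup is commonly embedded in a page as JSON-LD. The helper below builds schema.org `FAQPage` data from question/answer pairs; `faq_jsonld` is a hypothetical name, and valid markup does not guarantee that any search engine will show a rich result.

```python
import json


def faq_jsonld(faqs):
    """Build a JSON-LD <script> tag with schema.org FAQPage data from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
    }
    # Escape "</" so an answer containing "</script>" cannot close the tag early;
    # "\/" is a valid JSON escape for "/".
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"
```

The returned string can be pasted into the page’s HTML alongside the visible FAQ section it describes.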

🧠 Final Thoughts

Training your own AI model on custom prompts gives you more creative control, more consistent outputs, and better accuracy in your domain. Whether you’re building chatbots, content engines, or industry-specific assistants, a well-tuned model can help you stand out in an increasingly crowded digital space.