Introduction

In 2025, AI-powered voice cloning scams have surged, targeting individuals, businesses, and even government officials. With just a few seconds of audio, scammers can replicate a voice closely enough to fool family members and colleagues, leading to financial loss, identity theft, and emotional trauma. This article explains how voice cloning fraud works, reviews real-world cases, and shows how to protect yourself in the age of synthetic speech.

🎙️ What Is Voice Cloning Fraud?

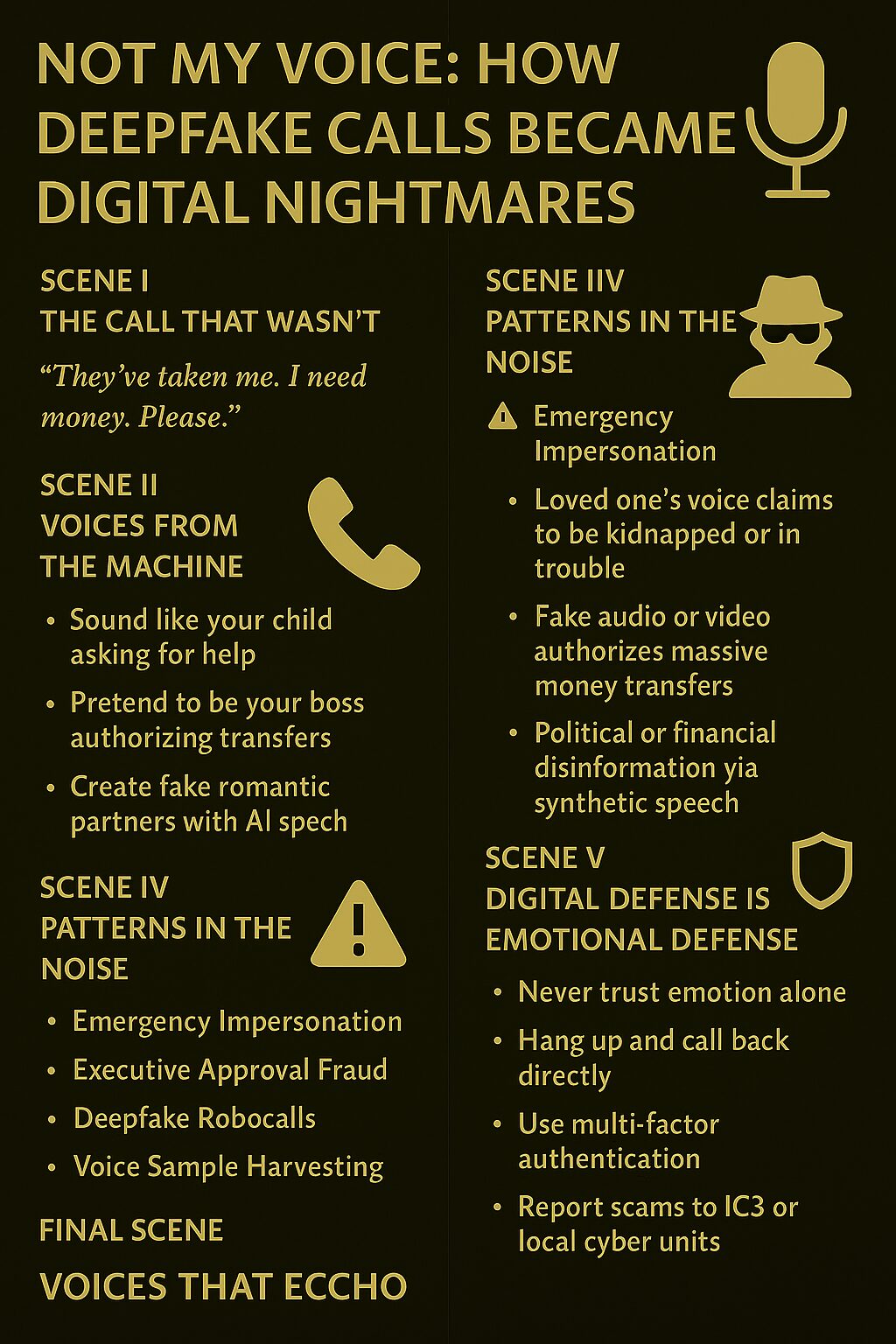

Voice cloning fraud involves using AI algorithms to replicate a person’s voice from short audio samples. These clones are then used to:

- Impersonate loved ones in distress

- Mimic executives to authorize fake transactions

- Trick victims into revealing sensitive information

- Create deepfake robocalls or phishing messages

The technology is now advanced enough that, especially over a phone line, a cloned voice can be very hard to tell apart from the real one.

🚨 Recent Real-World Cases

🧑‍⚖️ Political Impersonation

An impostor used AI-generated voice and text messages to impersonate U.S. Secretary of State Marco Rubio, contacting foreign ministers and other officials via Signal in an apparent attempt to extract sensitive information.

👨‍👩‍👧‍👦 Family Emergency Scams

Victims received calls from cloned voices of children or spouses claiming to be kidnapped or in danger. One woman nearly wired thousands of dollars after hearing what sounded like her husband's voice pleading for help.

🏢 Corporate Deepfake Attacks

In a Hong Kong case first reported in early 2024, a finance worker transferred about $25 million after attending a fake video call in which the other participants were deepfaked executives.

🧓 Elderly Targeted

An elderly man in India lost ₹1 lakh after a scammer cloned his relative’s voice and requested urgent medical funds.

📈 Why Voice Cloning Scams Are Rising

- Accessible Tools: Free or low-cost voice cloning apps are widely available

- Social Media Exposure: Public audio clips from TikTok, YouTube, and podcasts are easy to scrape

- Emotional Manipulation: Scammers exploit urgency, fear, and trust

- Lack of Regulation: Many voice cloning platforms do little to verify that the person being cloned has consented

🧠 How Scammers Use Voice Cloning

| Tactic | Description |
|---|---|
| Family Distress Calls | Fake emergencies using cloned voices of loved ones |
| Silent “Hello” Calls | Capture voice samples for future cloning |
| Romance Scams | AI chatbots and voices build fake relationships |
| Business Impersonation | Mimic executives to authorize payments |
| Extortion with Fake Evidence | AI-generated confessions or threats to coerce money |

🔐 How to Protect Yourself

✅ Verification Tips

- Use family code words for emergencies

- Always call back using known numbers

- Be skeptical of urgent requests from familiar voices

- Avoid sharing voice clips publicly
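
The first three tips can be read as a simple decision rule: a familiar voice alone is never enough. The sketch below is only an illustration of that rule, not a real security product. The names `CallRequest`, `FAMILY_CODE_WORDS`, and `should_trust` are hypothetical, and the code words are made-up examples.

```python
import hmac
from dataclasses import dataclass


@dataclass
class CallRequest:
    claimed_identity: str              # who the caller says they are
    spoken_code_word: str              # what they answered when asked for the code word
    called_back_on_known_number: bool  # did you hang up and redial a saved number?
    is_urgent_money_request: bool      # are they asking for money right now?


# Hypothetical example data: code words agreed in person, never posted online.
FAMILY_CODE_WORDS = {"mom": "blue heron", "sam": "maple street"}


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so small differences do not matter."""
    return " ".join(text.lower().split())


def should_trust(request: CallRequest) -> bool:
    """Apply the verification tips; how the voice sounds is deliberately not an input."""
    expected = FAMILY_CODE_WORDS.get(normalize(request.claimed_identity))
    if expected is None:
        return False  # no code word on file for this person
    code_ok = hmac.compare_digest(
        normalize(request.spoken_code_word).encode(), expected.encode()
    )
    if request.is_urgent_money_request:
        # Urgent money requests also need a callback on a number you already had.
        return code_ok and request.called_back_on_known_number
    return code_ok
```

For example, `should_trust(CallRequest("Mom", "blue heron", False, True))` returns `False`: the code word matches, but the urgent money request was never confirmed by calling back on a known number.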

✅ Technical Safeguards

- Enable multi-factor authentication on accounts

- Use identity protection services with dark web monitoring

- Avoid logging into sensitive accounts on public Wi-Fi
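
To see why multi-factor authentication helps against a cloned voice, it can be useful to look at how a typical authenticator-app code is produced. The following is a minimal sketch of a time-based one-time password in the style of RFC 6238, using only the Python standard library. The function name `totp` and its parameters are choices made for this illustration, and real accounts should rely on an established authenticator app rather than hand-written code.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, at_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Derive a short-lived numeric code from a shared secret and the clock."""
    now = time.time() if at_time is None else at_time
    counter = int(now // step)  # number of 30-second windows since the Unix epoch
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation: low 4 bits pick the offset
    value = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)
```

With the published RFC test secret `b"12345678901234567890"` and `at_time=59`, this returns `"287082"`. The code depends on a secret stored on your device and changes every 30 seconds, so a scammer who can only imitate your voice has nothing to derive it from.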

✅ Reporting & Recovery

- Report scams to the FBI’s Internet Crime Complaint Center (IC3)

- Contact your bank and credit bureaus immediately

- Change passwords and monitor accounts for suspicious activity

Final Thoughts

Voice cloning scams are no longer science fiction; they are a daily reality. As AI tools become more powerful and accessible, the risk of fraud keeps growing. Staying informed, verifying identities, and limiting your digital voice footprint are essential steps to protect yourself and your loved ones.