Introduction

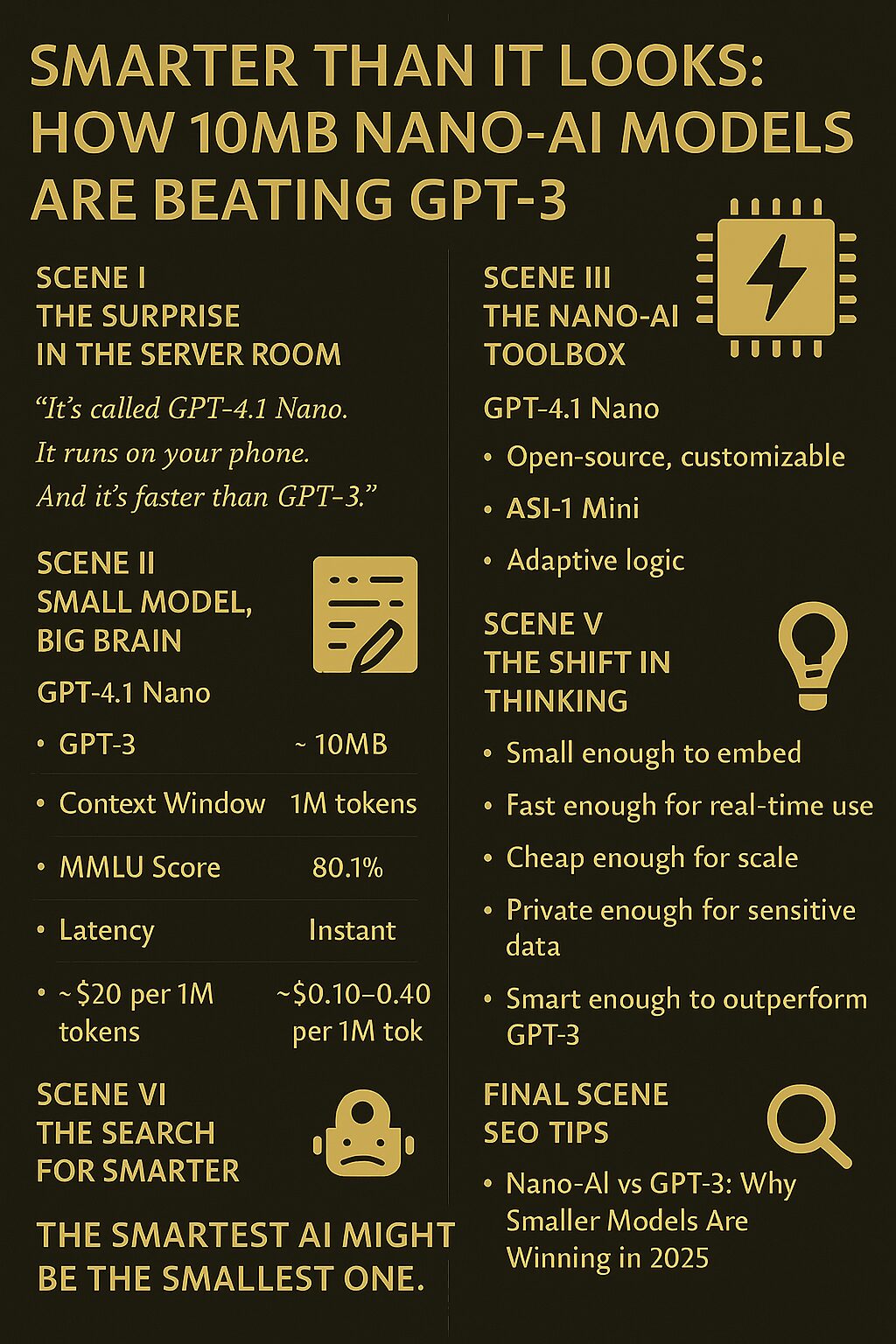

In 2025, the AI landscape is being reshaped not by bigger models but by smaller, smarter ones. The rise of Nano-AI models, some reported to be as small as 10MB, is challenging the dominance of legacy giants like GPT-3. These ultra-efficient models show that size isn’t everything: on many tasks they beat GPT-3 on speed, cost, and task-specific accuracy.

This article explores the Nano-AI revolution, highlights top-performing models, and explains why developers and businesses are embracing compact intelligence.

⚡ What Is Nano-AI?

Nano-AI refers to lightweight language models optimized for performance, speed, and cost-efficiency. These models typically range from 5MB to 50MB on disk and are designed for:

- Real-time inference

- Edge deployment

- Low-latency tasks like classification, autocomplete, and embedded AI agents

Examples include GPT-4.1 Nano, MiniGPT, and NanoGPT, which deliver impressive results despite their compact size.
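
To get a feel for what a 5MB to 50MB budget means, here is a rough back-of-the-envelope sketch. It assumes weight storage is simply parameter count times bytes per parameter and ignores tokenizer files and runtime overhead. The function names and the precision table are illustrative, not taken from any specific toolkit.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}

MB = 1_000_000
GB = 1_000_000_000


def model_size_bytes(num_params: int, precision: str = "fp16") -> float:
    """Approximate weight storage: parameters x bytes per parameter."""
    return num_params * BYTES_PER_PARAM[precision]


def max_params_for_budget(budget_bytes: float, precision: str = "int8") -> int:
    """Largest parameter count whose weights fit in the given byte budget."""
    return int(budget_bytes // BYTES_PER_PARAM[precision])


# GPT-3 has 175B parameters; stored as fp16 that is about 350 GB of weights.
print(model_size_bytes(175_000_000_000, "fp16") / GB)

# A 10 MB budget holds about 10M parameters at int8.
print(max_params_for_budget(10 * MB, "int8"))

# A 50 MB budget holds about 100M parameters at int4.
print(max_params_for_budget(50 * MB, "int4"))
```

In other words, a model in this size class has on the order of tens of millions of parameters, several thousand times fewer than GPT-3.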

🧠 Nano Models vs. GPT-3: Feature Comparison

| Feature | GPT-3 (175B) | GPT-4.1 Nano |
|---|---|---|
| Model Size | ~350GB (FP16 weights) | ~10MB (as claimed here; OpenAI has not published the model's size) |
| Context Window | 2K tokens (4K in later variants) | 1M tokens |
| MMLU Score | ~44% (few-shot) | 80.1% |
| GPQA Score | Not reported (benchmark postdates GPT-3) | 50.3% |
| Latency | High | Ultra-low |
| Cost per 1M Tokens | ~$20 | ~$0.10–$0.40 |
| Deployment | Cloud only | Cloud API |

Nano models are faster, cheaper, and more flexible, making them ideal for embedded systems and high-volume applications.

🔍 Why Nano-AI Is Disrupting the Industry

- 🧩 Efficiency: Small models require less compute, enabling deployment on mobile devices, IoT, and edge servers

- 💸 Affordability: At ~$0.10–$0.40 per 1M tokens versus ~$20 for GPT-3, token costs are roughly 50× to 200× lower

- ⚙️ Speed: Instant responses with minimal latency

- 🧠 Surprising Accuracy: GPT-4.1 Nano outperforms GPT-3 on benchmarks such as MMLU

- 🔐 Privacy-Friendly: Can run locally without sending data to cloud servers
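
To make the affordability point concrete, the sketch below works through the arithmetic using the per-1M-token prices quoted in this article (~$20 for GPT-3, ~$0.10 to ~$0.40 for GPT-4.1 Nano). The monthly token volume is a hypothetical workload chosen only for illustration.

```python
# Prices in USD per 1M tokens, as quoted in this article.
GPT3_PRICE = 20.00
NANO_PRICE_LOW = 0.10
NANO_PRICE_HIGH = 0.40


def cost_usd(tokens: int, price_per_million: float) -> float:
    """Cost of processing `tokens` tokens at a given price per 1M tokens."""
    return tokens / 1_000_000 * price_per_million


# Hypothetical workload: 500M tokens per month.
monthly_tokens = 500_000_000

gpt3_cost = cost_usd(monthly_tokens, GPT3_PRICE)
nano_cost_low = cost_usd(monthly_tokens, NANO_PRICE_LOW)
nano_cost_high = cost_usd(monthly_tokens, NANO_PRICE_HIGH)

print(f"GPT-3: ${gpt3_cost:,.2f}")
print(f"Nano:  ${nano_cost_low:,.2f} to ${nano_cost_high:,.2f}")

# Savings factor at each end of the quoted price range.
print(f"Savings: {GPT3_PRICE / NANO_PRICE_HIGH:.0f}x to {GPT3_PRICE / NANO_PRICE_LOW:.0f}x")
```

At these prices the same workload drops from about $10,000 to somewhere between $50 and $200 per month.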

🚀 Top Nano-AI Models in 2025

1. GPT-4.1 Nano

- Released April 2025 by OpenAI

- 1M token context window

- Scores 80.1% on MMLU, 50.3% on GPQA

- Ideal for classification, autocomplete, and embedded agents
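
As a minimal sketch of the classification use case, the snippet below builds a Chat Completions request for the `gpt-4.1-nano` model and sends it with the official `openai` Python package. It assumes the package is installed and that `OPENAI_API_KEY` is set in the environment. The helper names, the prompt wording, and the `"unknown"` fallback are illustrative choices, not an official recipe.

```python
MODEL = "gpt-4.1-nano"


def build_classification_request(text: str, labels: list[str]) -> dict:
    """Build the keyword arguments for a single-label classification call."""
    system_prompt = (
        "You are a text classifier. Reply with exactly one label from: "
        + ", ".join(labels)
        + "."
    )
    return {
        "model": MODEL,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": text},
        ],
        "temperature": 0,  # keep the output as repeatable as possible
    }


def classify(text: str, labels: list[str]) -> str:
    """Send the request; return the predicted label or 'unknown'."""
    # Imported lazily so the request builder works without the package.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(
        **build_classification_request(text, labels)
    )
    answer = (response.choices[0].message.content or "").strip()
    # Guard against replies that are not one of the allowed labels.
    return answer if answer in labels else "unknown"


request = build_classification_request(
    "My refund has not arrived yet.", ["billing", "technical", "other"]
)
print(request["messages"][0]["content"])
```

Calling `classify("My refund has not arrived yet.", ["billing", "technical", "other"])` would perform the actual network request.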

2. NanoGPT (by Andrej Karpathy)

- Minimal codebase for training and fine-tuning GPT-2-style models

- Open-source and customizable

- Perfect for training on small datasets
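
Training on a small dataset usually starts with a simple tokenizer. The sketch below shows a character-level vocabulary with a 90/10 train/validation split, in the spirit of the character-level data preparation used in NanoGPT's small-dataset examples. The function names and the toy corpus are illustrative, not code from the repository.

```python
def build_vocab(text: str) -> tuple[dict[str, int], dict[int, str]]:
    """Map every distinct character to an integer id, and back."""
    chars = sorted(set(text))
    stoi = {ch: i for i, ch in enumerate(chars)}
    itos = {i: ch for ch, i in stoi.items()}
    return stoi, itos


def encode(s: str, stoi: dict[str, int]) -> list[int]:
    """Turn a string into a list of token ids."""
    return [stoi[ch] for ch in s]


def decode(ids: list[int], itos: dict[int, str]) -> str:
    """Turn a list of token ids back into a string."""
    return "".join(itos[i] for i in ids)


corpus = "hello nano"  # toy corpus; a real run would read a text file
stoi, itos = build_vocab(corpus)
ids = encode(corpus, stoi)

# Hold out the last 10% of tokens for validation.
split = int(0.9 * len(ids))
train_ids, val_ids = ids[:split], ids[split:]

print(f"vocab size: {len(stoi)}")
print(f"train tokens: {len(train_ids)}, val tokens: {len(val_ids)}")
```

A character-level vocabulary keeps the embedding table tiny, which is one reason it suits small models and small corpora.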

3. ASI-1 Mini

- Adaptive reasoning with dynamic modes

- Excels in multi-step logic and decision-making

- Used in mobile apps and real-time analytics

🧪 Use Cases for Nano-AI

| Industry | Nano-AI Application |
|---|---|
| Healthcare | On-device symptom checkers and triage bots |
| Finance | Real-time fraud detection and transaction scoring |
| Retail | Smart kiosks and embedded customer assistants |
| Education | Offline tutoring apps with personalized feedback |
| IoT & Edge Devices | Voice assistants, predictive maintenance, alerts |

📈 SEO Tips for Nano-AI Content Creators

✅ Search-Friendly Titles

- “Nano-AI vs GPT-3: Why Smaller Models Are Winning in 2025”

- “Top 10MB AI Models That Outperform GPT-3”

✅ High-Impact Keywords

- “GPT-4.1 Nano performance benchmarks”

- “NanoGPT vs GPT-3 comparison”

- “Best lightweight AI models for edge deployment”

✅ Metadata Optimization

- Alt Text: “Comparison chart showing Nano-AI models outperforming GPT-3 in speed, cost, and accuracy”

- Tags: #NanoAI #GPT41Nano #LightweightLLMs #AIRevolution2025 #EdgeAIModels

Final Thoughts

The Nano-AI revolution proves that intelligence doesn’t need to be massive. With models like GPT-4.1 Nano outperforming GPT-3 in key benchmarks, the future of AI is not just smarter—it’s smaller, faster, and everywhere.

💬 Want help selecting the right Nano-AI model for your app, device, or business workflow? I’d be happy to guide you—byte by byte.