Introduction

As AI video tools continue to evolve, creators increasingly want custom-trained models that reflect their own style, brand tone, and creative goals. Platforms like Runway ML, Pika Labs, and Stable Video Diffusion (SVD) offer strong pre-trained models, but training or fine-tuning your own model lets you personalize generated video far beyond what prompting a generic model allows.

In this guide, we’ll walk you through the key steps to train a custom AI video model, list tools and platforms to use, and share SEO strategies to help your personalized videos gain visibility.

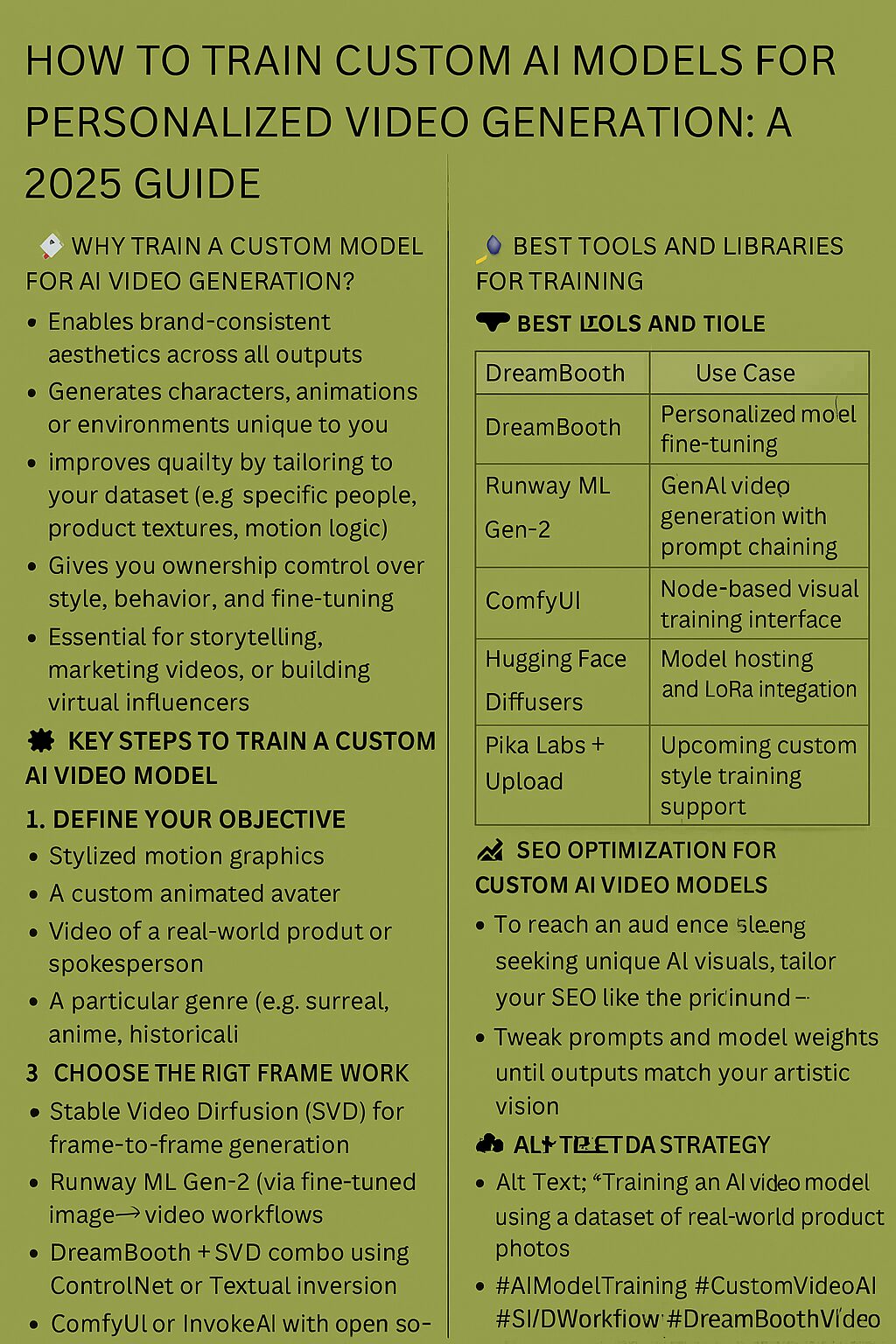

🚀 Why Train a Custom Model for AI Video Generation?

- Enables brand-consistent aesthetics across all outputs

- Generates characters, animations, or environments unique to you

- Improves quality by tailoring to your dataset (e.g., specific people, product textures, motion logic)

- Gives you ownership and control over style, behavior, and fine-tuning

- Essential for storytelling, marketing videos, or building virtual influencers

🧠 Key Steps to Train a Custom AI Video Model

1. Define Your Objective

Decide what your model will generate:

- Stylized motion graphics

- A custom animated avatar

- Video of a real-world product or spokesperson

- A particular genre (e.g. surreal, anime, historical)

2. Gather and Prepare a Dataset

- Collect 100–2,000+ high-quality images or video clips

- Use diverse angles, lighting, and expressions for realism

- Annotate or tag your data if fine control is needed
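Here is a minimal sketch of the tagging step. It assumes your image or clip files sit in one folder and uses a made-up trigger token (`sks_brand`). Each file gets a caption that starts with the trigger token, in the `file_name` + caption layout that the Hugging Face `datasets` ImageFolder loader reads from a `metadata.jsonl` file. The helper names and the `text` caption column are illustrative assumptions. Check what your training script expects, especially for video clips.

```python
import json
from pathlib import Path

# Extensions treated as training media (an assumption; adjust to your data).
MEDIA_EXTENSIONS = {".png", ".jpg", ".jpeg", ".webp", ".mp4"}

def build_manifest(file_names, trigger_token, tags_by_file=None):
    """Pair each media file with a caption that starts with the trigger token."""
    tags_by_file = tags_by_file or {}
    records = []
    for name in sorted(file_names):
        if Path(name).suffix.lower() not in MEDIA_EXTENSIONS:
            continue  # skip notes, sidecar files, etc.
        caption = ", ".join([trigger_token, *tags_by_file.get(name, [])])
        # The "text" caption column name is an assumption; many scripts let you choose it.
        records.append({"file_name": name, "text": caption})
    return records

def write_jsonl(records, path):
    """Write one JSON object per line (the metadata.jsonl format)."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record) + "\n")

# Hypothetical example: "sks_brand" is a made-up trigger token.
records = build_manifest(
    ["front.jpg", "clip_02.mp4", "notes.txt"],
    trigger_token="sks_brand",
    tags_by_file={"front.jpg": ["studio lighting", "front view"]},
)
for record in records:
    print(json.dumps(record))
```

Calling `write_jsonl(records, "dataset/metadata.jsonl")` saves the manifest next to the media files. Keeping the trigger token first in every caption gives the model one consistent handle for your subject or style.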

3. Choose the Right Framework

Select a platform that supports custom training:

- Stable Video Diffusion (SVD) for image-to-video generation (animating a conditioning frame into a short clip)

- Runway ML Gen-2 (via fine-tuned image → video workflows)

- DreamBooth + SVD combo using ControlNet or Textual Inversion

- ComfyUI or InvokeAI with open-source LoRA support
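Several of these options rely on LoRA (Low-Rank Adaptation), so it helps to see why it is cheap to train. LoRA freezes a pretrained weight matrix $W_0$ and learns a low-rank update $BA$, scaled by $\alpha / r$. The worked numbers below use an illustrative 768 × 768 layer with rank $r = 4$. They are not values from any specific model:

```latex
W' = W_0 + \Delta W = W_0 + \frac{\alpha}{r} B A,
\qquad B \in \mathbb{R}^{d \times r},\; A \in \mathbb{R}^{r \times k},\; r \ll \min(d, k)

\text{trainable parameters: } r(d + k) \;\text{ instead of }\; dk

d = k = 768,\; r = 4:\quad 4 \times (768 + 768) = 6{,}144 \;\text{ vs. }\; 768^2 = 589{,}824 \;(\approx 1.04\%)
```

Because $B$ is typically initialized to zero, the adapted model starts out identical to the base model. The factor $\alpha / r$ controls how strongly the trained adapter shifts it.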

4. Fine-Tune the Model

- Use cloud platforms like Google Colab, AWS EC2, or RunPod

- Apply fine-tuning methods like LoRA, DreamBooth, or Textual Inversion

- Train for a small number of epochs (often 1–5), adjusting the learning rate between runs

- Validate with sample outputs and iterate for style alignment
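DreamBooth- and LoRA-style runs are often configured in optimizer steps rather than epochs, so it helps to convert between the two before tuning learning rates. The sketch below assumes a fixed batch size and an optional per-image repeat count. It uses linear warmup followed by cosine decay, which is one common schedule among several. The function names and the 1e-4 peak rate are illustrative, not recommended settings.

```python
import math

def total_steps(num_samples, batch_size, epochs, repeats=1):
    """Optimizer steps for a run; a final partial batch still counts as a step."""
    steps_per_epoch = math.ceil(num_samples * repeats / batch_size)
    return steps_per_epoch * epochs

def lr_at(step, total, peak_lr, warmup_steps=0):
    """Linear warmup to peak_lr, then cosine decay toward zero by `total`."""
    if step < warmup_steps:
        return peak_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total - warmup_steps)
    return peak_lr * 0.5 * (1.0 + math.cos(math.pi * progress))

# Illustrative run: 300 images, batch size 4, 3 epochs, peak LR 1e-4.
total = total_steps(300, batch_size=4, epochs=3)
for step in (0, 10, total // 2, total - 1):
    print(step, f"{lr_at(step, total, 1e-4, warmup_steps=10):.2e}")
```

Here 300 images at batch size 4 give 75 steps per epoch and 225 steps over 3 epochs. That total is the horizon the schedule decays over, so changing the epoch count also stretches or compresses the learning-rate curve.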

5. Test with Custom Prompts

Generate test clips using scene-based prompts:

> “Model walking through neon-lit alley, long coat flaring, camera following from behind, cinematic cyberpunk tone.”

Tweak prompts and model weights until outputs match your artistic vision.
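Tweaking prompts and weights is easier to judge when you vary one ingredient at a time. This hypothetical helper splits a scene-based prompt into subject, action, camera, and tone, then pairs each variant with a LoRA strength to try. The `lora_scale` values assume your pipeline lets you scale adapter strength. Rendering each combination is left to whichever tool you use.

```python
from itertools import product

def build_prompt(subject, action, camera, tone):
    """Join the parts of a scene-based prompt into one string."""
    return f"{subject} {action}, {camera}, {tone}."

def prompt_grid(subjects, actions, cameras, tones, lora_scales):
    """Return every (prompt, lora_scale) pair; the last argument varies fastest."""
    return [
        (build_prompt(subject, action, camera, tone), scale)
        for subject, action, camera, tone, scale in product(
            subjects, actions, cameras, tones, lora_scales
        )
    ]

grid = prompt_grid(
    subjects=["Model"],
    actions=["walking through neon-lit alley, long coat flaring"],
    cameras=["camera following from behind", "low-angle tracking shot"],
    tones=["cinematic cyberpunk tone"],
    lora_scales=[0.6, 0.8, 1.0],  # hypothetical adapter strengths to compare
)
for prompt, scale in grid:
    print(f"{scale:.1f}  {prompt}")
```

With one subject, action, and tone, two camera directions, and three scales, `prompt_grid` yields six test renders. The first is the neon-alley prompt above at scale 0.6.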

🛠️ Best Tools and Libraries for Training

| Tool / Platform | Use Case |
|---|---|
| DreamBooth | Personalized model fine-tuning (Stable Diffusion) |
| Runway ML Gen-2 | Generative video with prompt chaining |
| ComfyUI | Node-based visual interface for generation and training workflows |
| Hugging Face Diffusers | Diffusion pipelines and LoRA integration (models hosted on the Hugging Face Hub) |
| Pika Labs + Upload | Upcoming custom style training support |

📈 SEO for Custom AI Video Models

To reach an audience seeking unique AI visuals, tailor your SEO like this:

✅ Search-Optimized Titles

- “How to Train Your Own AI Video Generation Model in 2025 (Step-by-Step Guide)”

- “Fine-Tune AI Models for Personalized Video Content Using DreamBooth or LoRA”

✅ Long-Tail Keywords

- “Custom AI video generation model with branded aesthetic using Runway ML”

- “How to train a personalized animation style with SVD and ControlNet”

✅ Smart Metadata Strategy

- Alt Text: “Training an AI video model using a dataset of real-world product photos”

- Tags: #AIModelTraining #CustomVideoAI #SVDWorkflow #DreamBoothVideo #PersonalizedAIVisuals
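Alt text and hashtags cover images and social posts. For the video page itself, search engines can also read schema.org `VideoObject` structured data embedded as JSON-LD. This minimal sketch reuses the tags above as keywords. The URLs and date are placeholders, and the property set is deliberately small, so check Google's video structured-data guidelines for what they currently require.

```python
import json

def video_jsonld(name, description, thumbnail_url, upload_date, content_url, tags):
    """Build schema.org VideoObject structured data as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "VideoObject",
        "name": name,
        "description": description,
        "thumbnailUrl": thumbnail_url,
        "uploadDate": upload_date,  # ISO 8601 date, e.g. "2025-01-15"
        "contentUrl": content_url,
        # Hashtags become plain, comma-separated keywords.
        "keywords": ", ".join(tag.lstrip("#") for tag in tags),
    }
    return json.dumps(data, indent=2)

print(video_jsonld(
    name="How to Train Your Own AI Video Generation Model in 2025",
    description="Training an AI video model using a dataset of real-world product photos",
    thumbnail_url="https://example.com/thumb.jpg",  # placeholder
    upload_date="2025-01-15",  # placeholder
    content_url="https://example.com/video.mp4",  # placeholder
    tags=["#AIModelTraining", "#CustomVideoAI", "#SVDWorkflow"],
))
```

The printed JSON goes inside a `<script type="application/ld+json">` tag on the page that embeds the video.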

Final Thoughts

Training your own AI model for video is like handing your creative brain a cinematic engine. It takes effort, but the reward is full creative control, brand consistency, and visual innovation.
